DeepSeek and the Uncomfortable Truth About AI Progress

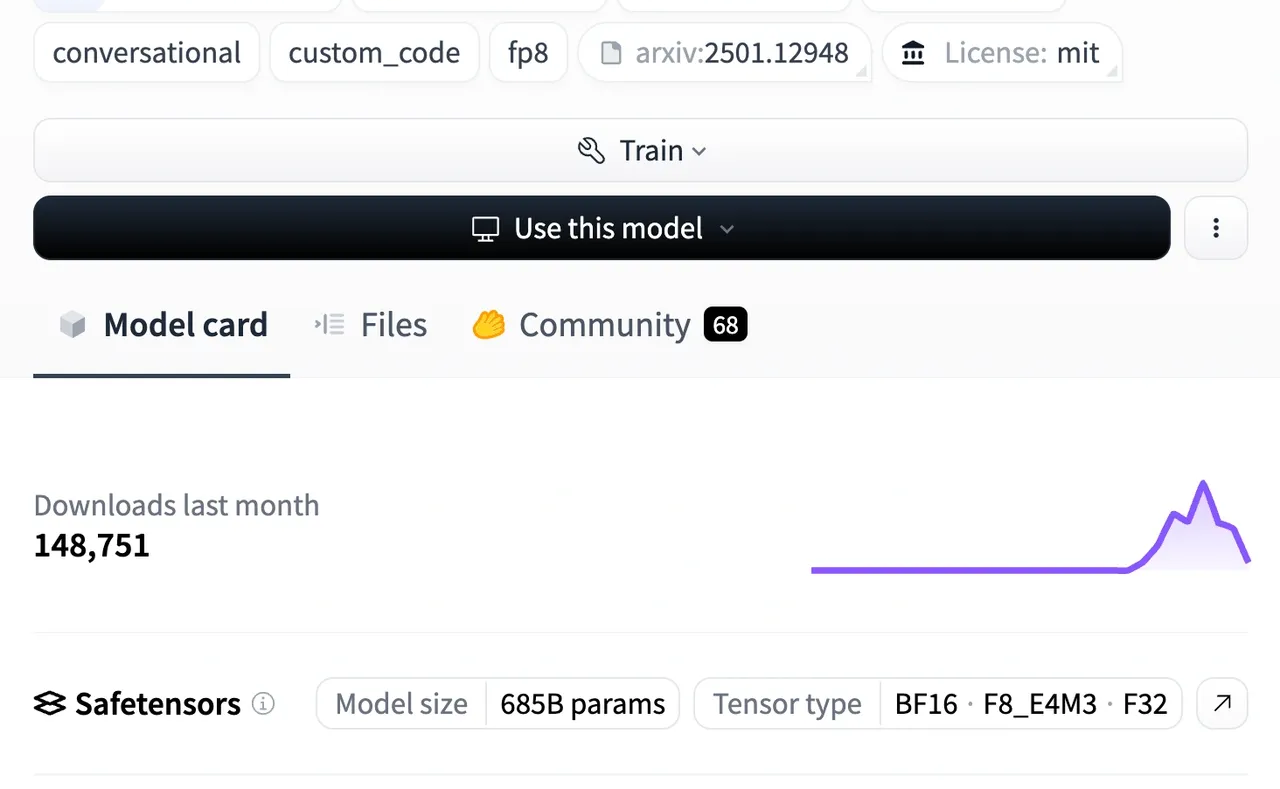

_Hugging Face page for DeepSeek R1 eight days after release._

The AI industry thrives on disruption, but even by its standards, the rise of China's DeepSeek R1 has sparked existential dread. When a model matches or outperforms OpenAI's flagship o1 on several benchmarks at a fraction of the cost, while being open-source, it's easy to see why panic ensued (TechCrunch). Nvidia lost roughly half a trillion dollars in market cap overnight (WSJ). OpenAI's dominance suddenly looks fragile. The DeepSeek app has risen to #1 on the iOS App Store (Mashable). Yet beneath the chaos lies a simple truth:

The rise of DeepSeek-R1 is not a crisis, but a triumph for the entire field.

DeepSeek's success is a testament to two forces often underestimated in Silicon Valley's closed-door labs: the power of open collaboration and the inevitability of efficiency gains. The company's decision to open-source DeepSeek-R1 and share its methodology—detailed in a transparent technical report—was a deliberate accelerant. "The open-source DeepSeek-R1… will benefit the research community to distill better smaller models," they state, framing their work as a collective stepping stone (arXiv). This ethos invites scrutiny, replication, and improvement. Meta will dissect its reinforcement learning techniques; OpenAI will benchmark its cost-performance ratios; and startups will graft their innovations into niche applications. The result? A rising tide that lifts all boats.

Critics warning of the "AI bubble popping" misunderstand the economics (Barron's). The Jevons paradox, in which efficiency gains increase total demand for a resource rather than curtail it, applies here. As models like DeepSeek slash inference costs and democratize access, new markets emerge. Small businesses priced out of OpenAI's API fees can now experiment. Developers in emerging economies can build locally relevant tools. The reality is that AI demand is elastic, and affordability unlocks scale (O'Reilly). The bubble isn't bursting; it's multiplying.
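To make the Jevons argument concrete, here is a minimal sketch under a textbook assumption: demand follows a constant-elasticity curve, `quantity = scale * price ** -elasticity`. The function name `total_spend`, the tenfold price drop, and the elasticity values are illustrative assumptions, not figures from any of the cited sources. The point is only that total spending rises after a price cut when elasticity exceeds 1, and falls when it is below 1.

```python
def total_spend(price: float, elasticity: float, scale: float = 1.0) -> float:
    """Total spending under an assumed constant-elasticity demand curve."""
    # Quantity demanded grows as price falls; elasticity controls how fast.
    quantity = scale * price ** (-elasticity)
    return price * quantity


# Compare spending before and after a hypothetical 10x drop in unit price.
for elasticity in (0.5, 1.0, 1.5):
    before = total_spend(price=1.0, elasticity=elasticity)
    after = total_spend(price=0.1, elasticity=elasticity)
    direction = "rises" if after > before * 1.000001 else (
        "falls" if after < before * 0.999999 else "is unchanged"
    )
    print(f"elasticity={elasticity}: total spend {direction}")
```

Whether real demand for AI inference is this elastic is an empirical question; the sketch only shows what the claim would imply if it is.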

Of course, there are losers. The disruption is real, and the casualties are clear: closed-source AI labs like OpenAI and Google, once buoyed by proprietary data and compute monopolies, now face an existential reckoning. Their traditional moats—exclusive access to vast human-labeled datasets, sprawling GPU clusters—are crumbling. Why? Because DeepSeek's breakthroughs prove that scale alone isn't a defensible advantage. When a model can self-evolve robust reasoning capabilities without costly traditional SFT (supervised fine-tuning), the game changes. Nvidia, too, feels the shockwaves—its $0.5 trillion market cap loss reflects fears that cheaper, smarter models might reduce demand for brute-force compute. But this is a misread. Nvidia's pain is temporary; the true losers are those clinging to closed ecosystems in a world racing toward open, efficient innovation.

[Image: DeepSeek training cost comparison]

_DeepSeek reported a training cost of about $5.6m for V3, the base model behind R1 (Forbes), roughly the same as the reported cost of Sam Altman's hypercar (Business Insider)._

DeepSeek's success lies in its departure from conventional training dogma. Instead of relying on armies of human annotators, it employs Group Relative Policy Optimization (GRPO), a reinforcement learning algorithm that samples a group of answers for each prompt and reinforces the ones that score above the group average (arXiv). By using rule-based rewards (e.g., verifiable math answers, code that passes predefined tests, correctly formatted reasoning) rather than neural reward models, the training setup reduces exposure to "reward hacking" and lets models autonomously refine their problem-solving strategies. The result? A base model that teaches itself to think longer, harder, and more logically with no SFT required, as the DeepSeek-R1-Zero variant demonstrated; the released R1 adds a small "cold-start" fine-tuning stage, mainly to improve readability. This isn't just a technical tweak; it's a paradigm shift. And because the approach is open-source, its impact will compound as labs worldwide iterate on it. Nvidia's hardware will rebound, but closed-source AI's hegemony? That era is over.
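The two ideas in this paragraph, rule-based rewards and group-relative scoring, can be sketched in a few lines. This is a simplified illustration, not DeepSeek's implementation: the reward weights (0.5 for format, 1.0 for accuracy), the function names, and the use of the population standard deviation are assumptions made for the example. The `<think>` and `<answer>` tags follow the output template described in the R1 report. The full algorithm also includes a clipped policy-gradient objective and a KL penalty, which are omitted here.

```python
import re
import statistics


def rule_based_reward(completion: str, expected_answer: str) -> float:
    """Score a completion with fixed rules instead of a learned reward model."""
    reward = 0.0
    # Format rule (assumed weight 0.5): reasoning must sit inside <think> tags.
    if re.search(r"<think>.+?</think>", completion, flags=re.DOTALL):
        reward += 0.5
    # Accuracy rule (assumed weight 1.0): the tagged answer must match exactly.
    match = re.search(r"<answer>(.*?)</answer>", completion, flags=re.DOTALL)
    if match and match.group(1).strip() == expected_answer:
        reward += 1.0
    return reward


def group_relative_advantages(rewards: list[float]) -> list[float]:
    """Normalize each reward against the mean and spread of its own group."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    if std == 0.0:
        # Every sample scored the same, so none is preferred over another.
        return [0.0] * len(rewards)
    return [(r - mean) / std for r in rewards]


# A hypothetical group of sampled answers to the prompt "What is 2 + 2?"
completions = [
    "<think>2 + 2 is 4</think><answer>4</answer>",  # good format, correct
    "<think>maybe 5</think><answer>5</answer>",     # good format, wrong
    "4",                                            # no tags at all
    "<answer>4</answer>",                           # correct, no reasoning
]
rewards = [rule_based_reward(c, "4") for c in completions]
advantages = group_relative_advantages(rewards)

for completion, advantage in zip(completions, advantages):
    print(f"{advantage:+.2f}  {completion}")
```

Because each answer is scored relative to its own group, no separate value (critic) network is needed to estimate a baseline. Answers with a positive advantage are made more likely in the next policy update, and those with a negative advantage less likely.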

The lessons are clear. First, intelligent post-training strategies matter more than brute force. DeepSeek's GRPO algorithm exemplifies how targeted reinforcement learning, which rewards accuracy and format rigor, can coax base models into generating robust chains of thought. Second, business models must adapt to open-source realities. Take startups like Bread, our own company, which lets users fine-tune custom AI through natural conversation. Our groundbreaking technique thrives on the rising intelligence of open-source base models: as systems like DeepSeek advance, Bread's users can teach increasingly sophisticated models to handle nuanced, business-critical tasks (customer service protocols, supply chain optimizations) with nothing but iterative dialogue. This isn't tied to a single model; it's a symbiotic cycle. Each leap in foundational AI (DeepSeek today, another tomorrow) amplifies what end users, whether marketers, engineers, or clinicians, can "bake" into tailored solutions. The better the base reasoning, the more efficiently Bread converts casual instructions ("Be proactive with client follow-ups") into reliable, enterprise-grade behavior. By sharing breakthroughs, DeepSeek invites global iteration, not isolation.

There's a great irony here: the new standard for open AI collaboration comes from China, not the West. DeepSeek, a company in a country stereotyped as closed, has outpaced U.S. incumbents by embodying the transparency Silicon Valley once championed. Labs like OpenAI and Google, which pay lip service to "open" ideals while hoarding models, now face an uncomfortable reckoning: their dogma of closed superiority bred complacency. DeepSeek's rise isn't just technical; it's proof that monopolies stifle progress and that openness, regardless of origin, is the real frontier.